Westworld Confronts What It Feels Like To Be Human

It’s obvious whether or not you will like Westworld from its very first scene. In it, an unseen man asks: “Have you ever questioned the nature of your reality?”

If you don’t care about that question, then you won’t like Westworld, plain and simple.

If, like me, and apparently a whole lot of others, you have, then Westworld has been on your mind way too often the last few months. It’s added to the dialogue that I’ve been having with my conscience ever since I hit adolescence, a conversation around those big, emotional, frustrating, often overwhelming questions. Who am I, really? What do I want? Why am I here? What is my purpose? Why do I feel this way? Because when you take away the western veneer, shootouts, naked robots, and HBO-required sex, Westworld is about what it feels like to have a conscience.

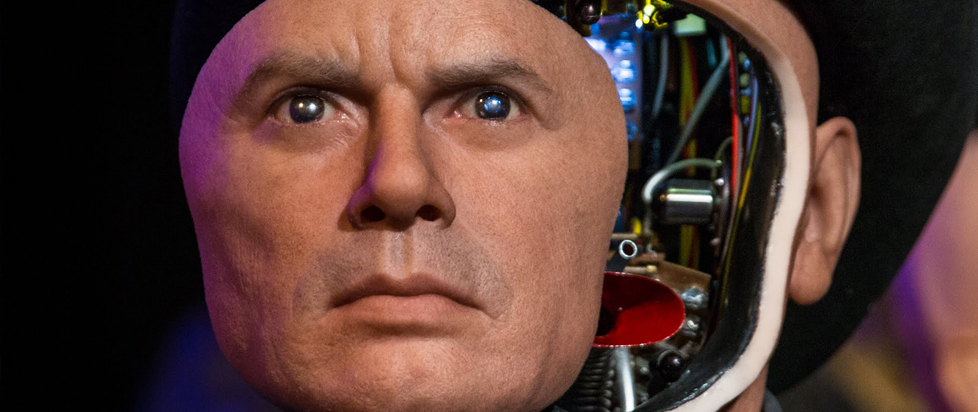

The show is centered around the titular Westworld, a massive (forums debate between 100 and 500 square miles) wild west theme park, where for $40,000 a day, you can live out your wildest cowboy fantasies. Inhabiting the park are “Hosts,” ultra-advanced AI designed to be human stand-ins, acting as sheriffs, prostitutes, farmers, ruffians, criminals, citizens, and the like. These Hosts, while nearly indistinguishable from humans, have no free will, no memories, and are incapable of harming humans beyond a minor scrape.

However, after the first few episodes, it becomes clear that the Hosts are beginning to think beyond their scripts. And while there have been many stories about AI achieving sentience, Westworld gets at what makes the singularity, at its heart, interesting. Instead of getting mired in technical discussions or overplayed arguments concerning morality, Westworld focuses on how having a conscience feels. Much of the show’s main thrust is spent on the Hosts experiencing feelings, reeling from their own decisions, exploring memories, and yearning for a mysterious something that they do not yet know is self-purpose. This is crystal clear in the journey of the show’s (arguable) lead character, Host Dolores Abernathy.

Spoilers for Westworld follow —

Dolores is one of Westworld’s original Hosts, made before the theme park was even open to the public. She was created to be a simple character, the local rancher’s daughter who looks pretty, loves to paint, enjoys “natural splendor,” and dreams of going someplace far away, on a grand adventure.

By the end of the season, Dolores gets what she was programmed to “want,” but not in the way you might imagine.

From the first episode onward, she starts inching closer and closer to true sentience. She starts being able to remember, a feature purposefully omitted from all Hosts, as, by the end of the day, they often end up bamboozled, murdered, raped, captured, attached to, or tortured by the visiting humans. She goes off-script, committing actions far beyond what she was programmed to do, including killing other Hosts. Perhaps most importantly, she starts dreaming and hearing voices in her head.

These new features don’t do Dolores any favors; quite the opposite, in fact. Because of these sentient tendencies, Dolores is put through hell. She remembers the countless times she has been betrayed, killed, and raped, and has seen the same done to her friends and family. She goes on a quest with one of the human visitors that, despite their best efforts, only leads to more murder, more violence, and more betrayal. Her desire to go someplace far away becomes genuine, and is met with the realization that the world (really Westworld) itself is designed to keep her stuck. Throughout the entire season, her efforts only lead to more pain and more suffering.

This whole time, we, the audience, are wondering. Does she have her own conscience yet? Or is this still just an AI reacting to new scenarios? After all, Hosts are capable of slight improvisation…

In the show’s season finale, we finally confront this question. And, like many of the show’s hardest-hitting moments, there’s no sex, gunfighting, or explosions.

In an empty church, Bernard, aware of new truths regarding Dolores and other Hosts gaining consciences, confronts his partner Dr. Ford, the chief engineer of Westworld. Dr. Ford explains that his old partner discovered what led the Hosts to gain consciences: “Suffering. The pain that the world is not as you want it to be.” At the same time, downstairs, Dolores realizes her own conscience; the voices she was hearing in her head were not her code, but her own voice, guiding her.

These combined reveals hit me emotionally like a speeding truck. Westworld essentially states that the most important part of our sentience, the one unique part of any human’s life, is emotion. And not just any emotion, but a very specific feeling that burdens humankind: the pain of want.

Dr. Ford argues that what makes us human is that we suffer, not from pain or disease or injury, but from our imagination. We suffer because we can dream. And when we awaken from those dreams, and see the world is not as we dreamt it to be, it hurts. Often, it hurts too much. As for Dolores, she essentially became human once she started dreaming.

By focusing nearly all of its effort on this ending, this conversation about how it feels to be human, Westworld becomes empathetic. Who doesn’t hurt from seeing the world as it is, and not as they wish it were? Like Dolores, I want to see the beauty in the world, instead of the ugliness. I want all of the pain I feel to be worth something. I want to know that my life has meaning, that my actions have some sort of effect on the world around me. Like her, I want a purpose, I want a journey, and I want to feel fulfilled by the end of it. The message she delivers is that we want the same things.

That is how Dolores pulls the complex narrative of Westworld together, through confronting what it feels like to be human. And if that isn’t relatable, then you have yet to have an awakening of your own.