Word Lens: Augmented Reality On Acid

It’s extraordinarily rare for a technology to emerge that’s so revolutionary, so astounding, so surreal, that we feel a visceral connection to the future. But that’s how I feel about augmented reality.

To date, my dabbling in AR has been limited to shooting TIE fighters flying 360 degrees around me or following colored numbers that hover over Manhattan streets, directing me to the nearest subway line. Both approaches to AR are cool enough – as is the groundbreaking work being done by a Japanese company called Tonchidot – but a new app, Word Lens [iTunes link], is the first use of the technology to utterly blow my motherfucking mind.

And I think it’s gonna blow your mind, too.

Somehow – if I knew how, I’d be making a lot more money than I do now – Word Lens can identify written language it captures through the iPhone’s built-in camera, allowing you to do a couple of rather profound parlor tricks.

By focusing the camera on printed text – say on a piece of paper, a computer screen or a billboard – Word Lens can isolate individual words, and either reverse their letters or erase them completely from the lens’ field of vision, creating a virtual world in which letters are scrambled or missing entirely.

Pretty neat, but the app goes way beyond just that.

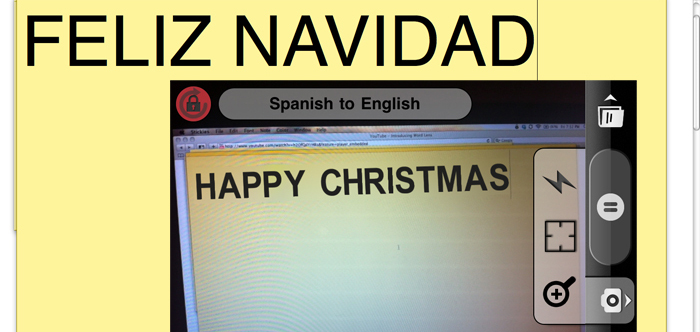

Word Lens has the ability – albeit only in the rudimentary stages – to take those words … and translate them into another language. Meaning, you can hold up your iPhone to a word in Spanish – for example, “hola” – and what you see on your iPhone screen, plain as day, is the word “hello.”

Mind blown yet?

Right now, Word Lens only does Spanish-to-English and vice versa, and its translation is far more miss than hit. But when it works – and it does work – it is nothing short of magic.

It’s the kind of application that makes you wonder how far augmented reality can take us. Word Lens is marketed at the world traveler, who’d conceivably never get lost in the bowels of a foreign train station again or fail to find something to read when all that’s handy is a local magazine. Tonchidot, the Japanese firm I mentioned earlier, wants users tagging the real world, so you could find hidden messages in everyday objects by looking through your phone, or walk into a mall and instantly know where the item you want can be found, and what the price is.

But me, I think even bigger. Will we, one day, be able to walk into a bar, look through a lens, and instantly see the relationship status and/or level of intoxication of every last woman in the room?

In that case, we might even end up having something blown other than our minds.

Here’s the Word Lens video demo:

Matt Marrone crawled into bed last night with his iPhone and read Spanish poetry on his iPad using Word Lens. Doesn’t someone, anyone, have a fucking sister around here? Follow him on Twitter @thebigm.